Understanding the neural basis of higher cognitive functions, such as those involved in language, planning, and logic, requires as a prerequisite a shift from mere localization, which has been popular in imaging research, to an analysis of network operation. A recent proposal (Hauser, Chomsky & Fitch, Science, 2002) points to infinite recursion as the core of several higher functions, and thus challenges cortical network theorists to describe network behavior that could subserve infinite recursion.

Considering a class of reduced Potts models (Kanter,

PRL, 1988) of large semantic associative networks [1,2], their

storage

capacity

has been studied analytically with statistical physics methods

[3,4],

and their dynamics simulated, once the units are endowed with a

simple

model of

firing frequency adaptation. Such models naturally display

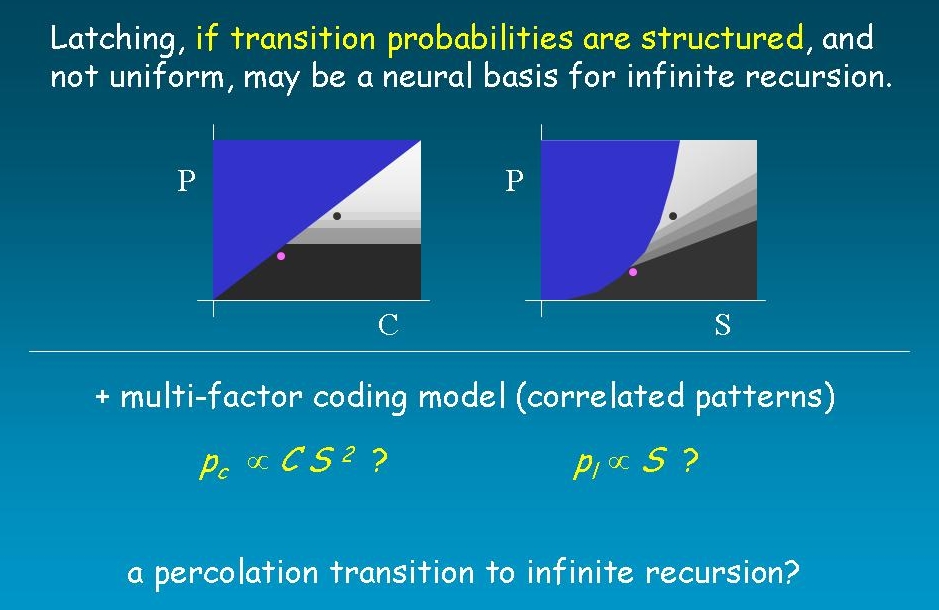

latching

dynamics,

i.e. they hop from one attractor to the next following a

stochastic

process

based on the correlations among attractors. The proposal is that

such

latching

dynamics may be associated with a network capacity for infinite

recursion, in

particular because it turns out, from the simulations and from

analytical

arguments [3], that latching only occurs after a percolation

phase transition, once

the network

connectivity becomes sufficiently extensive to support structured

transition

probabilities between global network states (work in progress by

ER, AT). The crucial development

endowing a

semantic system with a non-random dynamics would thus be an

increase in

connectivity, perhaps to be identified with the dramatic increase

in

spine

numbers recently observed in the basal dendrites of pyramidal

cells in
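The idea of hopping between attractors "following a stochastic process based on the correlations among attractors" can be illustrated with a toy sketch. This is not the Potts network with firing frequency adaptation described above: it only draws random Potts patterns, measures their pairwise overlap, and samples a chain of attractor indices in which more correlated patterns are more likely successors. The exponential weighting, the parameter `beta`, the `sparsity` value, and all function names are assumptions made for illustration.

```python
import math
import random

def make_patterns(p, n_units, n_states, sparsity, rng):
    """Draw p random Potts patterns; 0 marks a quiescent unit, 1..n_states an active state."""
    patterns = []
    for _ in range(p):
        pattern = [rng.randint(1, n_states) if rng.random() < sparsity else 0
                   for _ in range(n_units)]
        patterns.append(pattern)
    return patterns

def overlap(a, b):
    """Fraction of units that are active in the same Potts state in both patterns."""
    shared = sum(1 for x, y in zip(a, b) if x != 0 and x == y)
    return shared / len(a)

def transition_matrix(patterns, beta):
    """Row-stochastic matrix with zero diagonal (assumed form, not taken from the source).

    The weight of a hop from i to j grows exponentially with the overlap of i and j.
    """
    p = len(patterns)
    matrix = []
    for i in range(p):
        weights = [0.0 if i == j else math.exp(beta * overlap(patterns[i], patterns[j]))
                   for j in range(p)]
        total = sum(weights)
        matrix.append([w / total for w in weights])
    return matrix

def latching_sequence(matrix, start, n_steps, rng):
    """Sample a sequence of attractor indices from the transition matrix."""
    sequence = [start]
    for _ in range(n_steps):
        current = sequence[-1]
        nxt = rng.choices(range(len(matrix)), weights=matrix[current])[0]
        sequence.append(nxt)
    return sequence

rng = random.Random(0)
patterns = make_patterns(p=6, n_units=200, n_states=5, sparsity=0.3, rng=rng)
matrix = transition_matrix(patterns, beta=50.0)
sequence = latching_sequence(matrix, start=0, n_steps=20, rng=rng)
print(sequence)
```

With uncorrelated random patterns the rows of `matrix` are close to uniform, so the sequence is nearly random; structured transition probabilities would only appear if the stored patterns were themselves correlated.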

In a collaboration with Susan Rothstein at

In parallel, we are conducting psychophysical experiments closely modeled on those of Onnis et al. (2003), on the influence of variability in the statistical learning of correlations (NvR & AG).